Machine Learning May Remove Bias from the Hiring Process

Nov 5, 2020

At the premier Machine Learning Conference (MLConf) on November 6, 2020, Arena Analytics’ Chief Data Scientist Patrick Hagerty unveiled a cutting-edge technique that removes 92%-99% of latent bias from algorithmic models.

If undetected and unchecked, algorithms can learn, automate, and scale existing human and systemic biases. These models then perpetuate discrimination as they guide decision-makers in selecting people for loans, jobs, criminal investigation, healthcare services, and so much more.

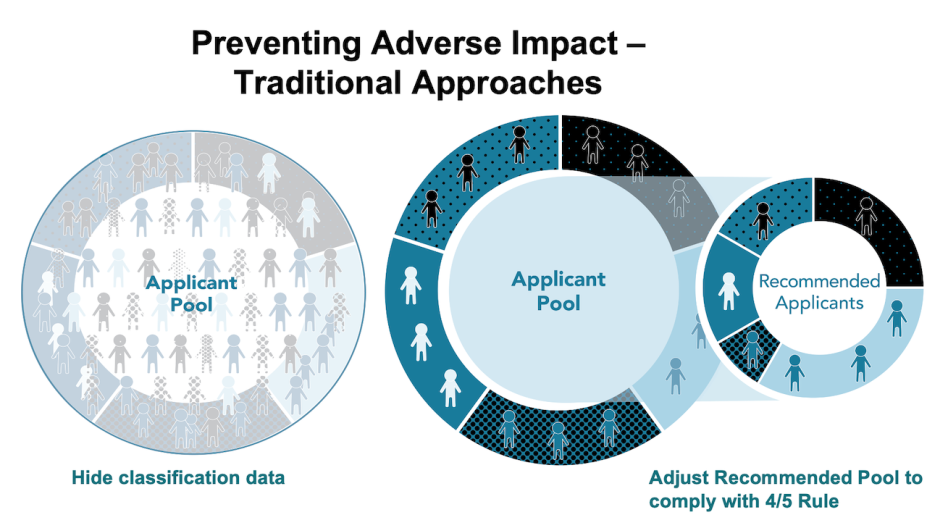

Currently, the primary methods of reducing the impact of bias on models are limited to adjusting input data or adjusting models after the fact to ensure there is no disparate impact.

Excluding all protected data from the inputs of the model may be sufficient for indemnification. However, correlations can persist even when protected data is removed. For example, zip codes can contain information about commute times that can improve a model that predicts worker retention, but that same data point may leak demographic data.

Adjusting models after the fact can ensure the protected class performs similarly to other classes. This is how LinkedIn is correcting its ‘predictions’: it adjusts recommendation rates so that, for example, women are recommended at the same rate as men. Success is measured by statistical significance and the four-fifths rule, as described by the Equal Employment Opportunity Commission.1
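The four-fifths check mentioned above can be sketched in a few lines. This is a minimal illustration, not LinkedIn's or Arena's code: the function names and the applicant counts are hypothetical, and the sketch assumes every group has at least one applicant and at least one group has a nonzero recommendation rate.

```python
def adverse_impact_ratios(recommended, applicants):
    """Each group's recommendation rate divided by the highest group's rate."""
    rates = {group: recommended[group] / applicants[group] for group in applicants}
    top_rate = max(rates.values())
    return {group: rate / top_rate for group, rate in rates.items()}


def passes_four_fifths(recommended, applicants, threshold=0.8):
    """True when no group's rate falls below 4/5 of the highest group's rate."""
    ratios = adverse_impact_ratios(recommended, applicants)
    return all(ratio >= threshold for ratio in ratios.values())


# Hypothetical counts, for illustration only.
applicants = {"men": 200, "women": 180}
recommended = {"men": 50, "women": 40}

ratios = adverse_impact_ratios(recommended, applicants)
print(ratios, passes_four_fifths(recommended, applicants))
```

A ratio below 0.8 for any group is the conventional signal of possible adverse impact; a passing ratio does not by itself show that a model is free of bias.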

For several years, Arena Analytics, like all providers of predictive analytics models, was limited to these approaches. Arena removed all data from the models that could correlate to protected classifications and then measured demographic parity by:

Monitoring the applicant pool’s distribution of protected class data and other characteristics

Comparing these distribution patterns to the applicant pool that was being predicted for success
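The comparison described in the two steps above could look roughly like the following sketch. The group labels, the counts, and the helper names `group_shares` and `representation_ratios` are all hypothetical; the article does not say how Arena computes this comparison.

```python
from collections import Counter


def group_shares(groups):
    """Fraction of the pool that belongs to each group."""
    counts = Counter(groups)
    total = sum(counts.values())
    return {group: count / total for group, count in counts.items()}


def representation_ratios(applicant_groups, recommended_groups):
    """Each group's share of the recommended pool relative to its applicant share."""
    pool = group_shares(applicant_groups)
    picked = group_shares(recommended_groups)
    return {group: picked.get(group, 0.0) / share for group, share in pool.items()}


# Hypothetical pools, for illustration only.
applicant_pool = ["A"] * 60 + ["B"] * 40
recommended_pool = ["A"] * 13 + ["B"] * 7

pool_ratios = representation_ratios(applicant_pool, recommended_pool)
print(pool_ratios)
```

A ratio near 1.0 means the recommended pool mirrors the applicant pool for that group; values well below 1.0 indicate under-representation.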

“These efforts brought us in line with EEOC compliance thresholds,” explains Myra Norton, President/COO of Arena Analytics.

“But we’ve always wanted to go further than a compliance threshold. We’ve wanted to surface a more diverse slate of candidates for every role in a client organization. And that’s exactly what we’ve accomplished, now surpassing 95% in our representation of different classifications.”

The Holy Grail in Removing Bias – Adversarial Fairness

The holy grail in algorithmic bias efforts is removing all protected information and its correlates without eroding the signal from the input data. This is an impossibly difficult task for a human, but it aligns well with recent developments in AI and Deep Learning called Adversarial Neural Networks. Arena uses the term Adversarial Fairness to describe the use of an adversarial network to distill the protected information out of input data.

The notion is to rearrange and embed the input data in a way that produces a strong model but makes it extremely difficult to find any correlations with protected information.

Chief Data Scientist Patrick Hagerty will explain this at MLConf.

“Arena’s primary model predicts the outcomes our clients want,” says Hagerty. “Model Two is a Discriminator designed to predict a classification. It attempts to detect the race, gender, background, and any other protected class data of a person. This causes the Predictor to adjust to eliminate correlations with the classifications the Discriminator is detecting.”

Arena trained the models against each other until they reached what’s known as a Nash equilibrium. This is the point at which neither the Predictor nor the Discriminator can improve its own performance by changing its strategy alone.
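The Predictor and Discriminator interplay can be illustrated with a small NumPy sketch. This is not Arena's implementation, which the article does not describe in detail. It assumes a linear encoder `E` that embeds the inputs, a logistic Predictor head `v`, a logistic Discriminator head `u`, and a "confusion" penalty that pushes the Discriminator's output toward 0.5, which is one common variant of adversarial training chosen here for stability. The synthetic data, the names, and the hyperparameters are all hypothetical.

```python
import numpy as np


def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-np.clip(a, -30, 30)))


def train_adversarial(X, y, z, k=2, lam=1.0, lr=0.1, steps=300, seed=0):
    """Alternate Discriminator and Predictor/encoder gradient steps."""
    rng = np.random.default_rng(seed)
    n, d = X.shape
    E = rng.normal(scale=0.1, size=(d, k))  # encoder: inputs -> embedding
    v = rng.normal(scale=0.1, size=k)       # Predictor head (outcome y)
    u = rng.normal(scale=0.1, size=k)       # Discriminator head (protected z)
    for _ in range(steps):
        h = X @ E
        # Discriminator step: lower its cross-entropy for predicting z.
        q = sigmoid(h @ u)
        u -= lr * h.T @ (q - z) / n
        # Predictor step: lower cross-entropy for y ...
        p = sigmoid(h @ v)
        q = sigmoid(h @ u)
        grad_v = h.T @ (p - y) / n
        grad_E_task = X.T @ np.outer(p - y, v) / n
        # ... while moving the Discriminator's output toward 0.5 (assumed penalty).
        grad_E_fair = X.T @ np.outer(q - 0.5, u) / n
        v -= lr * grad_v
        E -= lr * (grad_E_task + lam * grad_E_fair)
    return E, v, u


# Synthetic data: one feature carries the signal, the other leaks the protected class.
rng = np.random.default_rng(1)
n = 400
z = rng.integers(0, 2, size=n).astype(float)
signal = rng.normal(size=n)
proxy = (2 * z - 1) + rng.normal(scale=0.5, size=n)
X = np.column_stack([signal, proxy])
y = (signal + 0.3 * rng.normal(size=n) > 0).astype(float)

E, v, u = train_adversarial(X, y, z)
outcome_prob = sigmoid(X @ E @ v)    # Predictor's estimate of the outcome
protected_prob = sigmoid(X @ E @ u)  # Discriminator's guess at the protected class
```

In practice the two networks would be deeper, training would continue until neither side improves, and the Discriminator's performance would be checked on held-out data.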

The Impact of Adversarial Fairness

“When you have a model,” says Hagerty, “you can quantify how good and powerful it is. This is the measure we call the ‘Area Underneath the Curve’ of the Receiver Operator Characteristic, the ROC AUC.”

If a model produces random output, the area under the curve (AUC) is 0.5. For a perfect predictor, the AUC is 1. If the input data contains latent protected information, the discriminator’s AUC will start somewhere between 0.5 and 1. The goal is to train the discriminator’s AUC down to close to 0.5. It is impossible to reach exactly 0.5 because there will always be noise in the system.
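To make the 0.5 and 1 endpoints concrete, ROC AUC can be computed as the probability that a randomly chosen positive example is scored above a randomly chosen negative one, with ties counted as half. The sketch below uses that pairwise definition; the function name and the sample scores are illustrative.

```python
def roc_auc(labels, scores):
    """Fraction of (positive, negative) pairs ranked correctly; ties count 0.5."""
    positives = [s for label, s in zip(labels, scores) if label == 1]
    negatives = [s for label, s in zip(labels, scores) if label == 0]
    if not positives or not negatives:
        raise ValueError("need at least one positive and one negative label")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


perfect = roc_auc([0, 0, 1, 1], [0.1, 0.2, 0.8, 0.9])        # every pair ranked correctly
uninformative = roc_auc([0, 1, 0, 1], [0.5, 0.5, 0.5, 0.5])  # scores carry no information
partial = roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8])       # three of four pairs correct
```

This pairwise version is quadratic in the number of examples, which is fine for illustration; rank-based implementations are used for large datasets.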

Through adversarial network training, Arena reduced the discriminator’s ability to discern protected-class data by 92%-99%, as measured by the change in AUC.
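The article does not give the exact formula behind the 92%-99% figure. One plausible reading, assumed here, is the share of the discriminator's AUC above chance (0.5) that training removes. The AUC values in the example are hypothetical and are not Arena's results.

```python
def bias_reduction(auc_before, auc_after, chance=0.5):
    """Fraction of the discriminator's above-chance AUC removed by training."""
    excess_before = auc_before - chance
    if excess_before <= 0:
        raise ValueError("discriminator must start above chance")
    return (auc_before - auc_after) / excess_before


# Hypothetical values: a discriminator that drops from 0.80 to 0.52.
reduction = bias_reduction(0.80, 0.52)
print(f"{reduction:.1%}")
```

Under this reading, a reduction of 100% would correspond to a discriminator that performs no better than random guessing.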

Another way to measure the fairness of Arena’s model is through the more traditional demographic parity metrics, which compare the distribution of characteristics in the applicant pool with that of the recommended pool. Although Arena met the 80% compliance threshold for this fairness metric before applying adversarial networks, Arena now represents classifications at a rate of 95%+, often completely mirroring the applicant pool in its recommended pool.

Removing Bias from the Hiring Process

While adversarial networks can ensure Arena’s algorithms do not replicate biases that may have been normalized in past hiring efforts, Arena continues to recommend that organizations address implicit biases that may exist among staff members. In applying Arena’s insights to the hiring process, employers will always include a human decision-maker who can either reject or validate Arena’s AI recommendation. Using a human-in-the-loop model is important for safety and liability reasons, but it also raises the risk of bias being reintroduced by a human at the very end of the process.
